Update: Into the Visual

November 17, 2025

Don't let my silence for the past three years fool you. I've been up to stuff. Some pretty different stuff, in fact. Here's how I moved from audio programming to visual programming to film photography in that time.

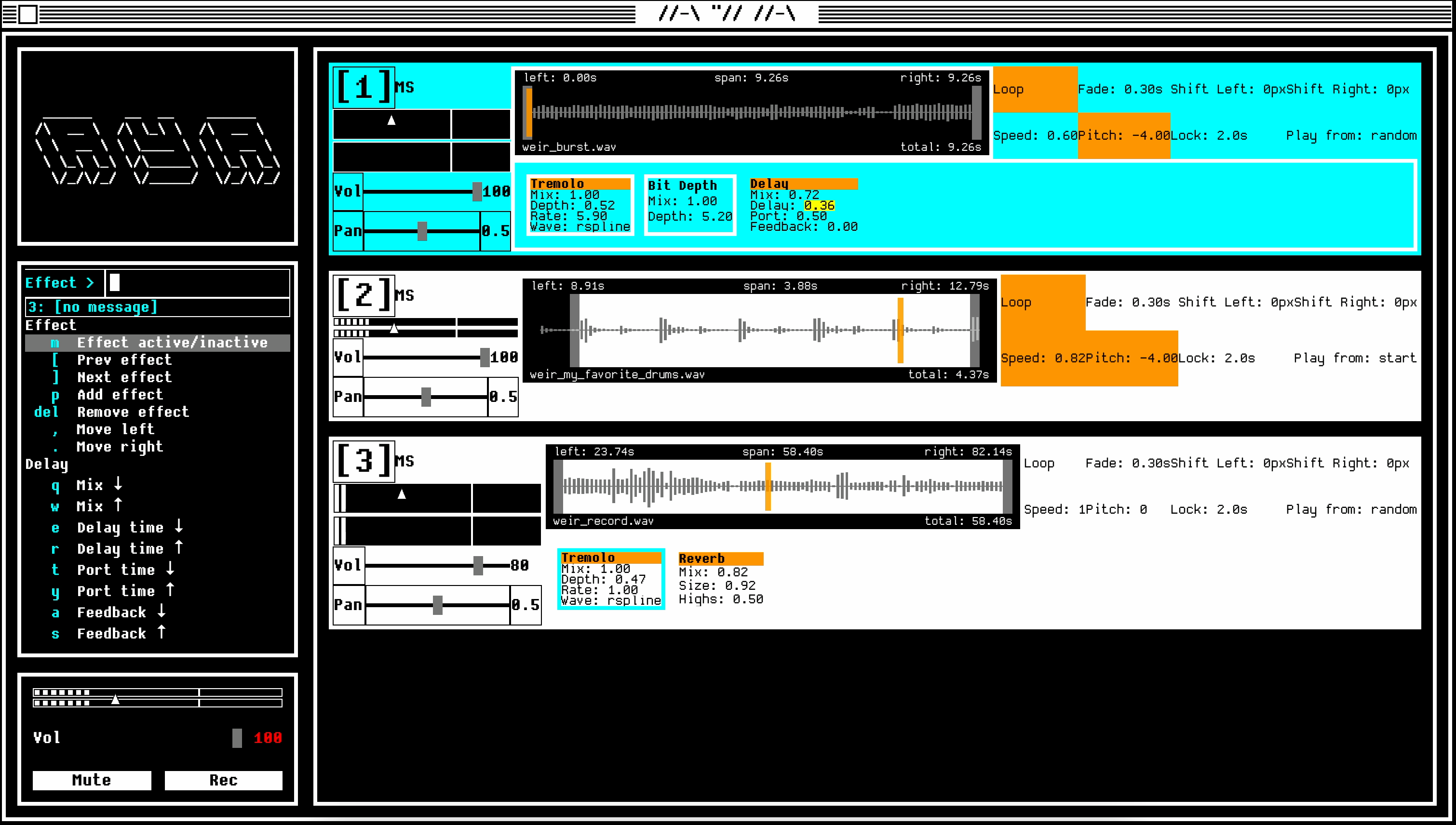

Buffer

Back in the fall of 2022 I was working on my Buffer program, which allowed for real-time layering, looping, and modulating of audio inputs. I created a text-based user interface so that the performance was controlled entirely through computer keyboard input.

Aya

In 2023 I took the Buffer concept further with my Aya Music System. The major step forward with Aya was that it featured a graphical user interface built with PyQt. It allowed me to activate effects modules, chain them together, and reorder the modules in the chain on the fly.

Anim

In February of 2024 I had to give a presentation on a new software platform my workplace was implementing. I was so tired of dry presentations, and I wanted to have some fun with this one. I ended up creating a Google Slides presentation with a ridiculous animation sequence using GIFs harvested from the internet. I "performed" this animation live by triggering the events with mouse clicks synchronized to music. This animation sequence took me hours to create, and I had to rehearse it many times to get the timing right.

Right away I began thinking, "Surely I should be able to create a program to perform and improvise these kinds of sequences in real-time." That was the birth of my Anim program. Once again I used Python and PyQt for building the GUI. The animated GIFs were sourced from Giphy and gifmovies.com, which sadly doesn't seem to exist anymore.

With Anim I could control various parameters in real time, such as speed, zoom, skew, rotation, transitions, and masking shapes. Behind it all were randomization algorithms that determined which specific GIFs would play throughout the performance. I could improvise a performance in real time, and Anim would export it as a video file.

Mosh

I soon grew frustrated with the limitations of looped animated GIFs. I wanted to play with longer, non-looping video clips, but what would I do with them? A particular kind of video glitch I'd seen over the years came to mind. With this glitch a video would freeze on one shot and the motion of a different shot would take over, as though the second shot were wearing the skin of the first. You'll sometimes see this glitch when skipping ahead in a streaming video online.

After some research I found that this kind of glitch, when used for artistic purposes, is called datamoshing. Two popular examples of it are A$AP Mob's "Lamborghini High" and Chairlift's "Evident Utensil" music videos. Those are pretty awesome, but the video that really stunned me was Takeshi Murata's Monster Movie.

Datamoshing is all about manipulating the data of a video file in order to cause visual artifacts when the file is decompressed during playback. To datamosh, I had to get deep into the weeds of how video codecs like MPEG-2 and H.264 work. Ramiro Polla's open source FFglitch project is a brilliant framework for generating datamoshed videos, and Daz Disley's dd_GlitchAssist offers clever algorithms for manipulating motion vectors.
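To make the "one shot wearing the skin of another" idea a bit more concrete, here is a toy Python sketch. It is not Mosh, and it does not use FFglitch or dd_GlitchAssist; it is a simplified model that assumes frames are tiny grids of integers. Codecs like MPEG-2 and H.264 predict most frames from an earlier reference frame using per-block motion vectors plus a correction (the residual). The sketch keeps only the motion-vector step and applies the motion of shot B to the last frame of shot A, which is roughly what happens when the keyframe that should start shot B is missing. The names `motion_compensate`, `datamosh`, and `BLOCK` are hypothetical.

```python
# Toy model of datamoshing: motion vectors from shot B are applied to the
# pixels of shot A's last frame, with no keyframe and no residuals.

BLOCK = 2  # hypothetical block size; real codecs use larger blocks (e.g. 16x16)


def motion_compensate(ref, vectors):
    """Predict a new frame by copying each pixel from the reference frame,
    offset by the motion vector of the block it belongs to.

    ref: 2D list (rows of pixel values).
    vectors: dict mapping (block_row, block_col) -> (dy, dx).
    Source positions outside the frame are clamped to the nearest edge.
    """
    h, w = len(ref), len(ref[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dy, dx = vectors.get((y // BLOCK, x // BLOCK), (0, 0))
            sy = min(max(y + dy, 0), h - 1)
            sx = min(max(x + dx, 0), w - 1)
            out[y][x] = ref[sy][sx]
    return out


def datamosh(last_frame_of_a, motion_of_b):
    """Apply each of shot B's per-frame motion fields to shot A's pixels,
    so A's image gets dragged around by B's motion."""
    frames = []
    current = last_frame_of_a
    for vectors in motion_of_b:
        current = motion_compensate(current, vectors)
        frames.append(current)
    return frames


# Usage: a 4x4 "frame" from shot A, moved by two frames of a pan from shot B
# in which every block samples the pixel one column to its right.
shot_a_last = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
pan = {(r, c): (0, 1) for r in range(2) for c in range(2)}
moshed = datamosh(shot_a_last, [pan, pan])
for row in moshed[-1]:
    print(row)
```

Each frame pulls the image one pixel further to the left, and the right edge smears because nothing new ever arrives to fill it in. That smearing is a tiny version of the "bloom" you see in real datamoshed footage, where pixels keep moving but are never refreshed.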

I created Mosh, which wasn't really a program but more of a script that harnessed FFglitch to automate the generation of datamoshed videos. The first large datamoshing piece I did was Planet of the Earth. I made the piece for a concert organized by musician and composer Brian Johnson as part of a Southeastern Minnesota Arts Council grant. The piece premiered in front of a small audience and was accompanied by a musical improvisation by Brian Johnson on guitar, Martha Larson on cello, Aria Peters on violin, and Bill Kautz on trumpet. The video above is a rearranged and shortened version of the original piece.

The next piece, Fun, was made in collaboration with my nieces and nephew at a summer cabin. I shot it on my iPhone and stitched it all together with datamoshing techniques. The final edit was set to "Crooks Like Children" by Fievel is Glauque.

Videography

My next datamoshing piece took me into the realm of videography. It came about when my artist friend Jade Hoyer invited me to contribute a video piece to her upcoming exhibition at Concordia College. The theme was native and invasive species. I decided to shoot video of the plant life in the arboretums and neighborhoods of Northfield. However, unlike with Fun, this time I wanted to get higher-quality footage with a proper video camera. I checked out a Sony A7S III from the library where I work.

The trouble was I didn't know the first thing about videography. Thus began a new learning curve. Lenses, focal length, aperture, ISO, shutter speed, exposure, dynamic range, camera work, shooting in raw, color grading, etc.

I called the resulting piece The eventual breeding range. The name was generated by a script I wrote that algorithmically combined syntactically related words from Charles S. Elton's book The Ecology of Invasions by Animals and Plants from 1958. The eventual breeding range consists of a sequence of 50 algorithmically processed and edited video pieces. The total running time is 1h 52m 34s. The video above is a compilation of excerpts from the piece. You might recognize the music from my Aya Music System above.

Film Photography

The seed for my interest in film photography might have been planted in the summer of 2024 when my wife, Kallie, asked for a Canon AE-1 Program for her birthday. A talented digital photographer, she now wanted to try film photography. I bought her the camera and some film, but I wasn't too curious about it at that point. However, while working on my videography projects a couple of months later, I learned that some people like to shoot with vintage manual lenses on their digital mirrorless cameras. And so I fell down that rabbit hole.

As I researched vintage camera lenses I would often come upon video reviews of the lenses by film photographers. In one of these videos, a guy demonstrated the technique of multiple exposures. That grabbed my attention from a creative standpoint. In my mind, exploiting a camera's capacity for multiple exposures toward creative ends was similar to exploiting digital video's capacity for datamoshing toward creative ends.

It wasn't long before I dove into film photography. At first I used the Canon AE-1 Program I had gotten Kallie, but soon I picked up a Nikon FE for myself. Even though the multiple exposure technique was the impetus for getting into this, I quickly realized that it was enough of a creative challenge just to take a well-exposed, well-composed, well-focused, well-developed, and well-digitized photo.

I've been shooting film for over a year now. I'm currently on roll #61. I've developed, scanned, and edited each roll myself at home. That includes black and white film and C-41 color film. You can find a selection of my photographs on the Photo pages and on my Instagram. I've also recently begun printing photos in the darkroom I set up in my basement.

Going Forward and Back

When I last posted here three years ago I didn't expect to develop a visual creative practice. That's the fun thing about honoring your curiosity and inspiration. It leads to such surprising places if you let it. This whole journey into the visual over the past two years has shown me that my creative impulses are not strictly aural.

My first post six years ago was about how I never expected to learn computer programming and develop a musical coding practice. Now I find myself at a similar point regarding my video and photography practice.

And yet my creative life isn't a linear path that only moves forward and never looks back. I've recently started a new music coding project with a friend in town. It's so exciting to return to music coding after two years focused on visual projects. I've missed it.